An inference engine for RDF

Master thesis

G.Naudts

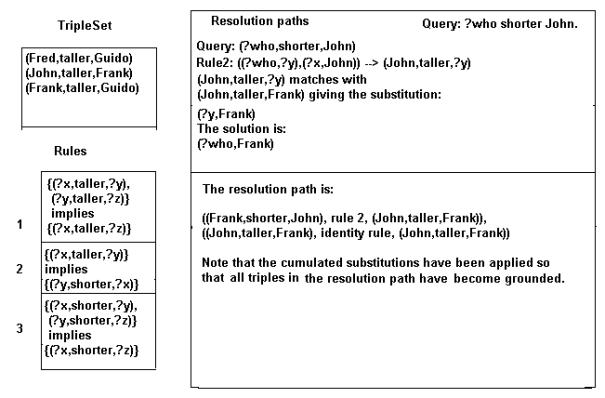

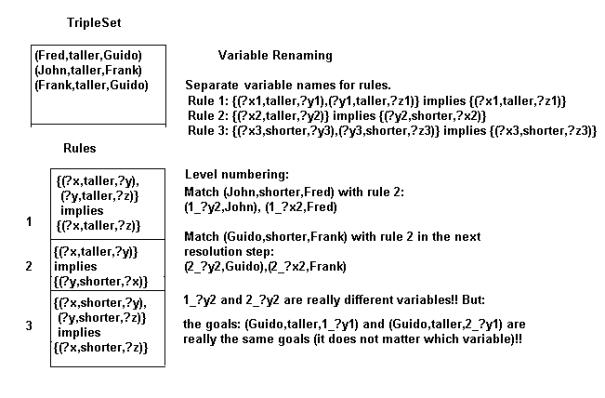

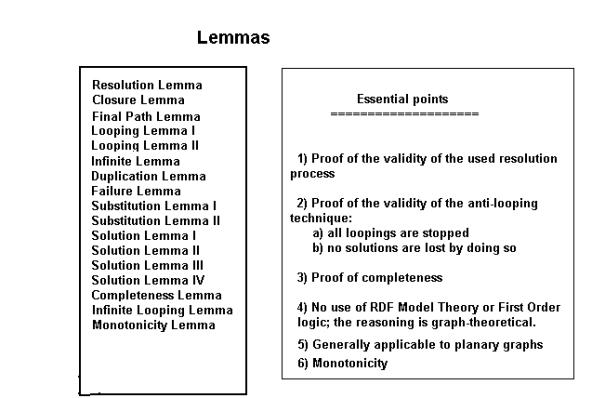

831708907

22

augustus 2003

Open University Netherlands

Agfa Gevaert

This document is the Master Thesis made as a part of

my Master Degree in Computer Science Education (Software Systems) at the Open

University of the Netherlands. The work has been done in collaboration with the

research department of the company Agfa in Mortsel Belgium.

Student

data

Name Guido Naudts

Student number 831708907

Address Secretarisdreef 5

2288 Bouwel

Telephone work 0030-2-542.76.01

Home 0030-14-51.32.43

E-mail Naudts_Vannoten@yahoo.com

Coaching

and graduation committee

Chairman: dr J.T. Jeuring, professor at the Open

University

Secretary : ir. F.J. Wester, senior lecturer at the Open

University

Coach: ir. J. De Roo, researcher at Agfa

Gevaert.

Acknowledgements

I want to thank Ir.J.De Roo for giving me the opportunity for making a thesis about a tremendous subject and his guidance in the matter.

Pr.J.Jeuring and Ir. F.Wester are thanked for their efforts to help me produce a readable and valuable text.

I thank M.P.Jones for his Prolog demo in the Hugs distribution that has very much helped me.

I thank all the people from the OU for their efforts during the years without which this work would not have been possible.

I thank my wife and children for supporting a father seated behind his computer during many years.

Coaching and graduation

committee

1.2.3.Conclusions of the case study

1.6. Relation with the current research

Chapter

2. The building blocks

2.4.Resource Description Framework RDF

2.7.2. The model theory of RDF

2.8. A global view of the Semantic Web

2.8.2. The layers of the Semantic Web

Chapter

3. RDF, RDFEngine and inferencing.

3.2.Recapitulation and definitions

3.4.Languages needed for inferencing

3.5.The model interpretation of a rule

3.14.Comparison of resolution and closure

paths

3.17.A formal theory of graph resolution

3.18.An extension of the theory to variable

arities

3.19.An

extension of the theory to typed nodes and arcs

Chapter

4. Existing software systems

Chapter 5. RDF, inferencing

and logic.

5.2.3. A problem with reification

5.3.Unification

and the RDF graph model

5.4.Completeness and soundness of the engine

5.7. Comparison with Prolog and Otter

5.7.1. Comparison of Prolog with RDFProlog

5.8.Differences

between RDF and FOL

5.11. RDF and constructive logic

5.12.

The Semantic Web and logic

5.15.The

World Wide Web and neural networks

6.3. An optimization technique

7.3. OWL Lite and inconsistencies

7.5. Reactions to inconsistencies and trust

7.5.1.Reactions to inconsistency

7.5.2.Reactions to a lack of trust

8.4. Searching paths in a graph

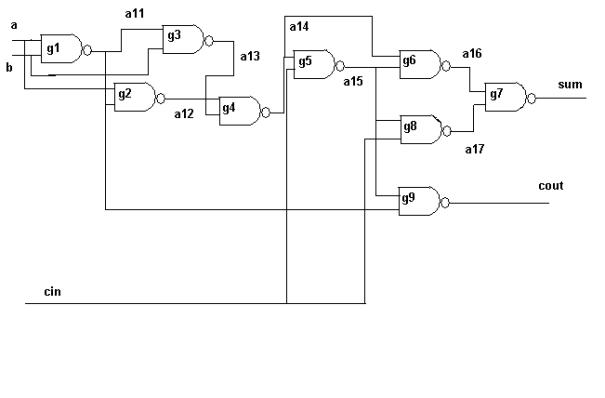

8.6. Simulation of logic gates.

8.8. A simulation of a flight reservation

9.2.Characteristics

of an inference engine

9.3. An evaluation of the RDFEngine

9.4. Forward versus backwards reasoning

Appendix

1: Excuting the engine

Appendix 2. Example

of a closure path.

Simulation

of a flight reservation

Appendix

4. Theorem provers an overview

2.2 Reasoning using resolution techniques: see

the chapter on resolution.

2.5. The matrix connection method

Higher order interactive provers

Overview of different logic systems

3. First order predicate logic

5.

Modal logic (temporal logic)

6. Intuistionistic or constructive logic

Appendix

5. The source code of RDFEngine

Appendix

6. The handling of variables

Summary

The Semantic Web is

an initiative of the World Wide Web

Consortium (W3C). The goal of this project is to define a series of

standards. These standards will create a framework for the automation of

activities on the World Wide Web

(WWW) that still need manual intervention by human beings at this moment.

A certain number of basic standards have been

developed already. Among them XML is without doubt the most known. Less known

is the Resource Description Framework

(RDF). This is a standard for the creation of meta information. This is the

information that has to be given to computers to permit them to handle tasks

previously done by human beings.

Information alone however is not sufficient. In order

to take a decision it is often necessary to follow a certain reasoning.

Reasoning is connected with logics. The consequence is that computers have to

be fed with programs and rules that can take decisions, following a certain

logic, based on available information.

These programs and reasonings are executed by inference engines. The definition of

inference engines for the Semantic Web is at the moment an active field of

experimental research.

These engines have

to use information that is expressed following the standard RDF. The

consequences of this requirement for the definition of the engine are explored

in this thesis.

An inference engine

uses information and, given a query,

reasons with this information with a solution

as a result. It is important to be able to show that the solutions, obtained in

this manner, have certain characteristics. These are, between others, the

characteristics completeness and validity. This proof is delivered in

this thesis in two ways:

1)

by means of a

reasoning based on the graph theoretical properties of RDF

2)

by means of a

model based on First Order Logics.

On the other hand these engines must take into account

the specificity of the World Wide Web. One of the properties of the web is the

fact that sets are not closed. This implies that not all elements of a set are

known. Reasoning then has to happen in an open

world. This fact has far reaching logical

implications.

During the twentieth century there has been an

impetous development of logic systems. More than 2000 kinds of logic were

developed. Notably First Order Logic (FOL)

has been under heavy attack, beside others, by the dutch Brouwer with the constructive or intuistionistic logic. Research is also done into kinds of logic

that are suitable for usage by machines.

In this thesis I argue that an inference engine

for the World Wide Web should follow a kind of constructive logic and that RDF,

with a logical implication added,

satisfies this requirement. It is shown that a constructive approach is

important for the verifiability of the results of inferencing.

The structure of the World Wide Web has a

number of consequences for the properties that an inference engine should

satisfy. A list of these properties is established and

discussed.

A query on the World Wide Web can extend over more

than one server. This means that a process of distributed inferencing must be

defined. This concept is introduced in an experimental inference engine,

RDFEngine.

An important characteristic for an engine that has to

be active in the World Wide Web is efficiency.

Therefore it is important to do research about the methods that can be used to

make an efficient engine. The structure has to be such that it is possible to

work with huge volumes of data. Eventually these data are kept in relational

databases.

On the World Wide Web there is information available

in a lot of places and in a lot of different forms. Joining parts of this

information and reasoning about the result can easily lead to the existence of contradictions and inconsistencies. These are inherent to the used logic or are

dependable on the application. A special place is taken by inconsistencies that

are inherent to the used ontology. For the Semantic Web the ontology is

determined by the standards rdfs and OWL.

An ontology introduces a classification of data and apllies restrictions to those data. An inference engine for the Semantic Web needs to have such characteristics that it is compatible with rdfs and OWL.

An executable specification of an inference engine in Haskell was constructed. This permitted to test different aspects of inferencing and the logic connected to it. This engine has the name RDFEngine. A large number of existing testcases was executed with the engine. A certain number of testcases was constructed, some of them for inspecting the logic characteristics of RDF.

Samenvatting

Het Semantisch

Web is een initiatief van het World Wide

Web Consortium (W3C). Dit project beoogt het uitbouwen van een reeks van

standaarden. Deze standaarden zullen een framework scheppen voor de

automatisering van activiteiten op het World

Wide Web (WWW) die thans nog manuele tussenkomst vereisen.

Een bepaald aantal basisstandaarden zijn reeds ontwikkeld waaronder XML

ongetwijfeld de meest gekende is. Iets minder bekend is het Resource Description Framework (RDF).

Dit is een standaard voor het creëeren van meta-informatie. Dit is de

informatie die aan de machines moet verschaft worden om hen toe te laten de

taken van de mens over te nemen.

Informatie alleen is echter niet voldoende. Om een beslissing te kunnen

nemen moet dikwijls een bepaalde redenering gevolgd worden. Redeneren heeft te

maken met logica. Het gevolg is dat computers gevoed moeten worden met

programmas en regels die, volgens een bepaalde logica, besluiten kunnen nemen

aan de hand van beschikbare informatie.

Deze programmas en redeneringen worden uitgevoerd door zogenaamde inference engines. Dit is op het huidig

ogenblik een actief terrein van experimenteel onderzoek.

De engines

moeten gebruik maken van de informatie die volgens de standaard RDF wordt

weergegeven. De gevolgen hiervan voor de structuur van de engine worden in deze

thesis nader onderzocht.

Een inference

engine gebruikt informatie en, aan de hand van een vraagstelling, worden redeneringen doorgevoerd op deze informatie

met een oplossing als resultaat. Het

is belangrijk te kunnen aantonen dat de oplossingen die aldus bekomen worden

bepaalde eigenschappen bezitten. Dit zijn onder meer de eigenschappen volledigheid en juistheid. Dit bewijs wordt op twee manieren geleverd: enerzijds

bij middel van een redenering gebaseerd op de graaf theoretische eigenschappen

van RDF; anders bij middel van een op First Order Logica gebaseerd model.

Anderzijds dienen zij rekening te houden met de specificiteit van het

World Wide Web. Een van de eigenschappen van het web is het open zijn van verzamelingen.

Dit houdt in dat van een verzameling niet alle elementen gekend zijn. Het

redeneren dient dan te gebeuren in een open

wereld. Dit heeft verstrekkende logische implicaties.

In de loop van de twintigste eeuw heeft de logica een stormachtige

ontwikkeling gekend. Meer dan 2000 soorten logica werden ontwikkeld. Aanvallen

werden doorgevoerd op het bolwerk van de First

Order Logica (FOL), onder meer door de nederlander Brouwer met de constructieve of intuistionistische logica. Gezocht wordt ook naar soorten logica

die geschikt zijn voor het gebruik door machines.

In deze thesis wordt betoogd dat een inference engine voor het WWW een

constructieve logica dient te volgen enerzijds, en anderzijds, dat RDF

aangevuld met een logische implicatie

aan dit soort logica voldoet. Het wordt aangetoond that een constructieve

benadering belangrijk is voor de verifieerbaarheid van de resultaten van de

inferencing.

De structuur van het World Wide Web heeft een aantal gevolgen voor de

eigenschappen waaraan een inference engine dient te voldoen. Een lijst van deze

eigenschappen wordt opgesteld en besproken.

Een query op het World Wide Web kan zich uitstrekken over meerdere

servers. Dit houdt in dat een proces van gedistribueerde inferencing moet

gedefinieerd worden. Dit concept wordt geïntroduceerd in een experimentele

inference engine, RDFEngine.

Een belangrijke eigenschap voor een engine die actief dient te zijn in

het World Wide Web is efficientie.

Het is daarom belangrijk te onderzoeken op welke wijze een efficiente engine

kan gemaakt worden. De structuur dient zodanig te zijn dat het mogelijk is om

met grote volumes aan data te werken, die eventueel in relationele databases

opgeslagen zijn.

Op het World Wide Web is informatie op vele plaatsen en in allerlei

vormen aanwezig. Het samen voegen van deze informatie en het houden van

redeneringen die erop betrekking hebben kan gemakkelijk leiden tot het ontstaan

van contradicties en inconsistenties. Deze zijn inherent aan

de gebruikte logica of zijn afhankelijk van de applicatie. Een speciale plaats

nemen de inconsistenties in die inherent zijn aan de gebruikt ontologie. Voor

het Semantisch Web wordt de ontologie bepaald door de standaarden rdfs en OWL. Een ontologie voert een classifiering in van gegevens en legt

ook beperkingen aan deze gegevens op. Een inference engine voor het Semantisch

Web dient zulkdanige eigenschappen te bezitten dat hij compatibel is met rdfs

en OWL.

Een uitvoerbare specificatie van een inference

engine in Haskell werd gemaakt. Dit liet toe om allerlei aspecten van

inferencing en de ermee verbonden logica te testen. De engine werd RDFEngine

gedoopt. Een groot aantal bestaande testcases werd onderzocht. Een aantal

testcases werd bijgemaakt, sommige speciaal met het oogmerk om de logische

eigenschappen van RDF te onderzoeken.

Chapter 1. Introduction

1.1. Overview

In chapter 1 a case study is given and then the

goals of the research, that is the subject of the thesis, are exposed with the

case study as illustration. The methods used are explained and an overview is

given of the fundamental knowledge upon which the research is based. The

relation with current research is indicated. Then the structure of the thesis

is outlined.

1.2. Case study

1.2.1. Introduction

A case study can serve as a guidance to what we want

to achieve with the Semantic Web. Whenever standards are approved they should

be such that important case studies remain possible to implement. Discussing all possible application fields here

would be out of scope. However one case study will help to clarify the goals of

the research.

1.2.2. The case study

A travel agent in Antwerp has a client who wants to go

to St.Tropez in France. There are rather a lot of possibilities for composing

such a voyage. The client can take the train to France, or he can take a bus or

train to Brussels and then the airplane to Nice in France, or the train to

Paris then the airplane or another train to Nice. The travel agent explains the

client that there are a lot of possibilities. During his explanation he gets an

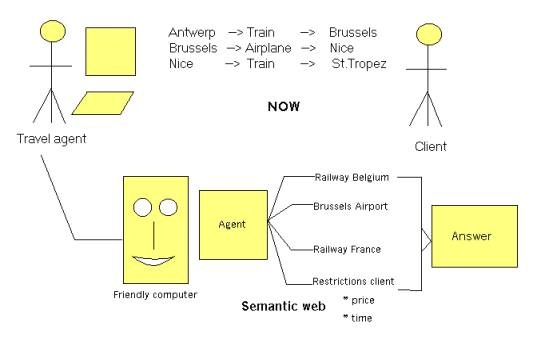

impression of what the client really wants. Fig.1.1. gives a schematic view of

the case study.

Fig.1.1. A Semantic

Web case study

He agrees with the client about the itinerary: by

train from Antwerp to Brussels, by airplane from Brussels to Nice and by train

from Nice to St. Tropez. This still leaves room for some alternatives. The

client will come back to make a final decision once the travel agent has said

him by mail that he has worked out some alternative solutions like price for

first class vs second class etc...

Remark that the decision for the itinerary that has

been taken is not very well founded; only very crude price comparisons have

been done based on some internet sites that the travel agent consulted during his

conversation with the client. A very cheap flight from Antwerp to Cannes has

escaped the attention of the travel agent.

The travel agent will now further consult the internet

sites of the Belgium railways, the Brussels airport and the France railways to

get some alternative prices, departure times and total travel times.

Now let’s compare this with the hypothetical situation

that a full blown Semantic Web would exist. In the computer of the travel agent

resides a Semantic Web agent that has at its disposal all the necessary

standard tools. The travel agent has a specialised interface to the general

Semantic Web agent. He fills in a query in his specialised screen. This query

is translated to a standardised query format for the Semantic Web agent. The agent

consult his rule database. This database of course contains a lot of rules

about travelling as well as facts like e.g. facts about internet sites where

information can be obtained. There are a lot of ‘path’ rules: rules for

composing an itinerary (for an example of what such rules could look like see:

[GRAPH]. The agent contacts different other agents like the agent of the

Belgium railways, the agents of the French railways, the agent of the airports

of Antwerp, Brussels, Paris, Cannes, Nice etc...

With the information recieved its inference rules

about scheduling a trip are consulted. This is all done while the travel agent

is chatting with the client to detect his preferences. After some 5 minutes the

Semantic Web agent gives the travel agent a list of alternatives for the trip;

now the travel agent can immediately discuss this with his client. When a

decision has been reached, the travel agent immediately gives his Semantic Web

agent the order for making the reservations and ordering the tickets. Now the

client only will have to come back once for getting his tickets and not twice.

The travel agent not only has been able to propose a cheaper trip as in the

case above but has also saved an important amount of his time.

1.2.3.Conclusions of the case study

That a realisation of such a system is interesting is

evident. Clearly, the standard tools do have to be very flexible and powerful

to be able to put into rules the reasoning of this case study (path

determination, itinerary scheduling). All this rules have then to be made by

someone. This can of course be a common effort for a lot of travel agencies.

What exists now? A quick survey learns that there are

web portals where a client can make reservations (for hotel rooms). However the

portal has to be fed with data by the travel agent. There also exist software

that permits the client to manage his travel needs. But all that software has

to be fed with information obtained by a variety of means, practically always

manually.

A partial simulation namely the command of a ticket

for a flight can be found in chapter 10 paragraph 8.

1.3.Research goals

1.3.1. Standards

In the case study different information sites have to

communicate one with another. If this has to be done in an automatic way a

standard has to be used. The standard that is used for expressing information

is RDF. RDF is a standard for expressing meta-information developed by the W3C.

The primary purpose was adding meta-information to web pages so that these

become more usable for computers [TBL]. Later the Semantic Web initiative was

launched by Berners-Lee with the purpose of developing standards for automating

the exchange of information between computers. The information standard is

again RDF [DESIGN]. However other standards are added on top of RDF for the

realisation of the Semantic Web (chapter 2).

1.3.2. Research tasks

The following research tasks were defined:

1)

define an inference process on top of RDF and give a

specification of this process in a

functional language.

In the case study the servers dispose of

information files that contain facts and rules. The facts are expressed in RDF.

The syntax of the rules is RDF also, but the semantic interpretation is

different. Using rules implies inferencing. On top of RDF a standardized inference

layer has to be defined. A functional language is very well suited for a

declarative specification. At certain points the inference process has to be

interrupted to direct queries to other sites on the World Wide Web. This

implies a process of distributed

inferencing where the inference process does not take place in one site

(one computer) but is distributed over several sites (see also fig. 1.1).

2)

Investigate which kind of logic is best suited for the

Semantic Web.

Using rules; making queries; finding

solutions; this is the subject of logic. So the relevance of logic for an

inferencing standard based on top of RDF has to be investigated.

3)

Give a proof of the validity and the completeness of

the specified inference process

It is without

question of course that the answers to a query have to be valid. By

completeness is meant that all answers to a query are found. It is not always necessary that all answers

are found but often it is.

4)

Investigate what can be done for augmenting the efficiency

of the inference process

A basic

internet engine should be fast because the amount of data can be high; it is

possible that data need to be collected from several sites over the internet.

This will already imply an accumulation of transmission times. A basic engine

should be optimized as much as possible.

5)

Investigate how inconsistencies can be handled by the

inference process

In a closed

world (reasoning on one computer system) inconsistencies can be mastered; on

the internet, when inferencing becomes a distributed process, inconsistencies

will be commonplace.

As of yet no standard is defined for inferencing on top of RDF. As will

be shown in this thesis there are a lot of issues involved and a lot of choices

to be made. The definition of such a standard is a necessary, but difficult

step in the progress of the Semantic Web. Therefore, in the research the accent

was put on points 1-3 where points 4 and 5 were treated less in depth.

In order to be able to define a standard a definition has to be provided

for following notions that represent minimal extensions on top of RDF: rules,

queries, solutions and proofs.

1.4. Research method

The

primary research method consists of writing a specification of an RDF inference

engine in Haskell. The engine is given the name RDFEngine. The executable

specification is tested using test cases where the collection of test cases of

De Roo [DEROO] plays an important role. Elements of logic, efficiency and

inconsistence can be tested by writing special test cases in interaction with a

study of the relevant literature.

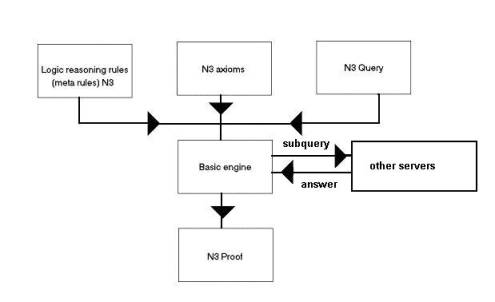

Fig.1.2. The inference engine RDFEngine in a server

with its inputs and outputs.

In fig.1.2 the global structure of the inference

engine is drawn. The inputs and outputs are in Notation 3 (N3). Notation 3 is

choosen because it is easier to work with than RDF and it is convertible to RDF

syntax. However, during the research, another syntax was developed, called

RDFProlog.

The engine is given a modular structure and different

versions exist for different testing purposes.

What has to be standardized is the input and output of

the block marked ‘Basic engine’ in fig.1.2. For the Semantic Web the precise

contents of this block will not make a part of the standard.

For making the Haskell specification the choice was made for a resolution based engine. A forward reasoning engine could have been chosen as well but a resolution based engine has better characteristics for distributed inferencing. Other choices of engine are also possible. The choice of a particular engine is not the subject of this thesis but rather the definition of the interface to the engine. However, interesting results that were obtained about resolution based inferencing are certainly worth mentioning.

1.5. The foundations

The research used mainly following material:

a) The collection of test cases of De Roo [DEROO]

b) The RDF model theory [RDFM] [RDFMS]

c) The RDF rules mail list [RDFRULES]

d) The classic resolution theory

[VANBENTHEM] [STANFORD]

e) Logic theories and mainly first order logic, constructive logic and

paraconsistent logic. [VANBENTHEM, STANFORDP]

1.6. Relation with the current research

The mail list RDF rules [RDFRULES] has as a

purpose to discuss the extension of RDF with inferencing features, mainly

rules, queries and answers. There are a lot of interesting discussions but no

clear picture is emerging at the moment.

Berners-Lee [DESIGN] seeks inspiration in first

order logic. He defines logic predicates (chapter 2), builtins, test cases and

a reference engine CWM. However the semantics of input and output formats for a

RDF engine are not clearly defined.

A common approach is to use existing logic

systems. RDF is then expressed in terms of these systems and logic (mostly

rules) is added on top e.g. Horn logic or frame logic. An example is found in

[DECKER]. In this case the semantics are those of the used logic.

The implementation of an ontology like OWL

(Ontology Web Language) implies also inferencing facilities that are necessary

for implementing the semantics of the ontology [OWL features].

Euler [DEROO] and CWM take a graph oriented

approach for the construction of the inference program. The semantics are

mostly defined by means of test cases.

The approach taken by my research for defining

the semantics of the inference input and output has two aspects:

1) It is graph oriented and, in fact, the theory is generally applicable to

labeled graphs.

2) From the logic viewpoint a constructive approach is taken for reasons of

verifiability (chapter 5).

3) A constructive graph oriented definition is given for rules, queries,

solutions and proofs.

1.7. Outline of the thesis

Chapter two gives an

introduction into the basic standards that are used by RDF and into the syntax

and model theory of RDF. Readers who are familiar with some of these matters can

skip these.

Chapter three exposes the meaning of inferencing using

an RDF facts and rules database. The Haskell specification, RDFEngine, is

explained and a theory of resolution based on reasoning on a graph is

presented.

Chapter four discusses existing software systems.

After the introduction to RDF and inferencing in chapter three, it is now

possible to highlight the most important aspects of existing softwares. The

Haskell specification RDFEngine and the theory presented in the thesis were not

based upon these softwares but upon the points mentionned in 1.5.

Chapter five explores in depth the relationship of RDF

inferencing with logic.

Here the accent is put on a constructive logic

interpretation of the graph theory exposed in chapter three.

Chapter six discusses optimalization techniques.

Especially important is a technique based on the theory exposed in chapter

three.

Chapter seven disusses inconsistencies that can arise

during the course of inferencing processes on the Semantic Web.

Chapter eight gives a brief overview of some use cases

and applications.

Chapter nine finally gives a conclusion and indicates

possible ways for further research.

Chapter 2. The building blocks

2.1.

Introduction

In this chapter a number of techniques are briefly

explained. These techniques are necessary building blocks for the Semantic Web.

They are also a necessary basis for inferencing on the web.

Readers

who are familiar with some topics can skip the relevant explanations.

2.2.XML

and namespaces

2.2.1. Definition

XML (Extensible Markup Language) is a subset of SGML (Standard General Markup

Language). In its original signification a markup language is a language which

is intended for adding information (“markup” information) to an existing

document. This information must stay

separate from the original hence the presence of separation characters. In SGML

and XML ‘tags’ are used.

2.2.2. Features

There are two kinds of tags: opening and closing tags.

The opening tags are keywords enclosed between the signs “<” and “>”. An

example: <author>. A closing tag

is practically the same, only the sign “/” is added e.g. </author>. With

these elements alone very interesting datastructures can be built. An example

of a book description:

<book>

<title>

The Semantic Web

</title>

<author>

Tim Berners-Lee

</author>

</book>

As can be seen it is quite easy to build hierarchical datastructures with these elements alone. A tag can have content too: in the example the strings “The Semantic Web” and “Tim Berners-Lee” are content. One of the good characteristics of XML is its simplicity and the ease with which parsers and other tools can be built.

The keywords in the tags can have attributes too. The

previous example could be written:

<book

title=”The Semantic Web” author=”Tim Berners-Lee”></book>

where attributes are used instead of tags.

XML is not a language but a meta-language i.e. a

language with as goal to make other languages (“markup” languages).

Everybody can make his own language using XML. A

person doing this only has to use the syntaxis of XML i.e. produce wellformed

XML. However more constraints can be added to an XML language by using DTD’s

and XML schema, thus producing valid XML documents. A valid XML document is one

that does not violate the constraints laid down in a DTD or XML schema. To

summarize: an XML language is a language that uses XML syntax and XML

semantics. The XML language can be defined using DTD’s or XML schema.

If everybody creates his own language then the

“tower-of-Babylon”-syndrome is looming. How is such a diversity in languages

handled? This is done by using namespaces.

A namespace is a reference to the definition of an XML language.

Suppose someone has

made an XML language about birds. Then he could make the following namespace

declaration in XML:

<birds:wing

xmlns:birds=”http://birdSite.com/birds/”>

This statement is referring to the tag “wing” whose description is to be found on the site that is indicated by the namespace declaration xmlns (= XML namespace). Now our hypothetical biologist might want to use an aspect of the physiology of birds described however in another namespace: <physiology:temperature xmlns:physiology=” http://physiology.com/xml/”> By the semantic definition of XML these two namespaces may be used within the same XML-object. <?xml version=”1.0” ?>

<birds:wing xmlns:birds=”http://birdSite.com/birds/”> large</birds:wing><physiology:temperature xmlns:physiology=” http://physiology.com/xml/”> 43</physiology:temperature>

2.3.URI’s and URL’s

2.3.1. Definitions

URI stands for Uniform Resource Indicator. A URI is anything that

indicates unequivocally a resource.

URL stands for Uniform Resource Locator. This is a subset of URI. A URL indicates the access to a resource. URN refers to a subset of URI and indicates names that must remain unique even when the resource ceases to be available. URN stands for Uniform Resource Name. [ADDRESSING]

In this thesis only URL’s will be used.

2.3.2. Features

The following example illustrates a URI format that is in common use.[URI]: www.math.uio.no/faq/compression-faq/part1.html Most people will recognize this as an internet address. It unequivocally identifies a web page. Another example of a URI is a ISBN number that unquivocally identifies a book.

The general format of an http URL is:

http://<host>:<port>/<path>?<searchpart>.

The host is of course the computer that contains the resource; the default port number is normally 80; eventually e.g. for security reasons it might be changed to something else; the path indicates the directory access path. The searchpart serves to pass information to a server e.g. data destinated for CGI-scripts.

When an URL finishes with a slash like http://www.test.org/definitions/ the directory definitions is addressed. This will be the directory defined by adding the standard prefix path e.g. /home/netscape to the directory name: /home/netscape/definitions.The parser can then return e.g. the contents of the directory or a message ‘no access’ or perhaps the contents of a file ‘index.html’ in that directory.

A path might include the sign “#” indicating a named anchor in an html-document. Following is the html definition of a named anchor:

<H2><A

NAME="semantic">The Semantic Web</A></H2>

A named anchor thus indicates a location within a document. The named anchor can be called e.g. by: http://www.test.org/definition/semantic.html#semantic

2.4.Resource Description Framework RDF

RDF is a language. The semantics are defined by [RDFM]; some concrete syntaxes are: XML-syntax, Notation 3, N-triples, RDFProlog. N-triples is a subset of Notation 3 and thus of RDF [RDFPRIMER]. RDFProlog is also a subset of RDF and is defined in this thesis.

Very basically RDF consist of triples: (subject, predicate, object). This simple statement however is not the whole story; nevertheless it is a good point to start.

An example [ALBANY] of a statement is:

“Jan Hanford created the J. S. Bach homepage.”.

The J.S. Bach homepage is a resource. This resource has a URI: http://www.jsbach.org/. It has a property:

creator with value ‘Jan Hanford’. Figure 2.1. gives a graphical view of this.

Creator>

</Description>

Fig.2.1. An example of a RDF relation.

In simplified RDF this is:

<RDF>

<Description about= “http://www.jsbach.org”>

<Creator>Jan Hanford</Creator>

</Description>

</RDF>

However this is without namespaces meaning that the notions are not well defined. With namespaces added this becomes:

<rdf:RDF

xmlns:rdf=”http://www.w3.org/1999/02/22-rdf-syntax-ns#”

xmlns:dc=”http://purl.org/DC/”>

<rdf:Description

about=”http://www.jsbach.org/”>

<dc:Creator>Jan

Hanford</dc:Creator>

</rdf:Description>

</rdf:RDF>

The first namespace xmlns:rdf=”http://www.w3.org/1999/02/22-rdf-syntax-ns#“

refers to the

document describing the (XML-)syntax of RDF; the second namespace xmlns:dc=”http://purl.org/DC/” refers

to the description of the Dublin Core, a basic ontology about authors and

publications. This is also an example of two languages that are mixed within an

XML-object: the RDF and the Dublin Core language.

In the

following example is shown that more than one predicate-value pair can be indicated for a resource.

<rdf:RDF

xmlns:rdf=”http://www.w3.org/1999/02/22-rdf-syntax-ns#”

xmlns:bi=”http://www.birds.org/definitions/”>

<rdf:Description

about= “http://www.birds.org/birds#swallow”>

<bi:wing>pointed</bi:wing>

<bi:habitat>forest</bi:habitat>

</rdf:Description>

</rdf:RDF>

Conclusion

What is RDF? It is a language with a simple semantics consisting of triples: (subject, predicate, object) and some other elements. Several syntaxes exist for RDF: XML, graph, Notation 3, N-triples, RDFProlog. Notwithstanding its simple structure a great deal of information can be expressed with it.

2.5.Notation 3

2.5.1. Introduction

Notation 3 is a syntax for RDF developed by Tim

Berners-Lee and Dan Connolly and represents a more human usable form of the

RDF-syntax with in principle the same semantics [RDF Primer]. Most of the test

cases used for testing RDFEngine are written in Notation 3. An alternative and

shorter name for Notation 3 is N3.

2.5.2. Basic properties

The basic

syntactical form in Notation 3 is a triple

of the form :

<subject> <verb> <object> where subject, verb and object are atoms. An atom can be either a URI (or a URI abbreviation) or a variable. But some more complex structures are possible and there also is some “ syntactic sugar”. Verb and object are also called property and value which is anyhow the semantical meaning.

In N3 URI’s can be indicated in a variety of

different ways. A URI can be indicated by its complete form or by an

abbreviation. These abbreviations have practical importance and are intensively

used in the testcases. In what follows the first item gives the complete

form; the others are abbreviations.

·

<http://www.w3.org/2000/10/swap/log#log:forAll> : this is the complete form of a URI in Notation 3. This

ressembles very much the indication of a tag in an HTML page.

·

xxx: : a label followed by a ‘:’

is a prefix. A prefix is defined in N3 by the @prefix instruction :

@prefix ont:

<http://www.daml.org/2001/03/daml-ont#>.

This defines the prefix ont: . After the label follows the URI represented by the prefix.

So ont:TransitiveProperty

is in full form <http://www.daml.org/2001/03/daml-ont#TransitiveProperty>

.

·

<#param> : Here only the tag in the HTML page is indicated. To get the complete

form the default URL must be added.the complete form is : <URL_of_current_document#param>.

The default URL is the URL of the current document i.e. the document that contains the description in Notation 3.

·

<> or <#>: this

indicates the URI of the current document.

·

: : a single double point is by convention

referring to the current document. However this is not necessarily so because

this meaning has to be declared with a prefix statement :

@prefix : <#> .

Two substantial abbreviations for sets of triples are property lists and

object lists. It can happen that a subject recieves a series of qualifications;

each qualification with a verb and an object,

e.g. :bird :color :blue;

height :high; :presence :rare.

This

represents a bird with a blue color, a high height and a rare presence.

These

properties are separated by a semicolon.

A verb or property can have several values e.g.

:bird :color :blue,

yellow, black.

This means that the bird has three colors. This

is called an object list. The two things can be combined :

:bird :color :blue,

yellow, black ; height :high ; presence :rare.

The objects in an objectlist are separated by a

comma.

A semantic and

syntactic feature are anonymous subjects.

The symbols ‘[‘ and ‘]’ are used for this feature. [:can :swim].

means there exists an anonymous subject x that can swim. The abbreviations for

propertylist and objectlist can here be used too :

[ :can :swim, :fly ; :color :yellow].

The property

rdf:type can be abbreviated as “a”:

:lion a :mammal.

really means:

:lion rdf:type :mammal.

2.6.The logic layer

In [SWAPLOG] an

experimental logic layer is defined for the Semantic Web. I will say a lot more

about logic in chapter 4. An overview of the most salient features (my engine

only uses log:implies, log:forAll, log:forSome and log:Truth):

log:implies : this is the implication, also indicated by an

arrow: à.

{:rat a :rodentia. :rodentia a :mammal.}à {:rat a :mammal}.

log:forAll : the purpose is to indicate universal variables :

{:rat a :rodentia. :rodentia a :mammal.}à {:rat a :mammal};

log:forAll :a, :b, :c.

indicates that :a, :b and :c are universal variables.

log:forSome: does the same for existential variables:

{:rat a :rodentia. :rodentia a :mammal.}à {:rat a :mammal}; log:forSome :a, :b, :c.

log:Truth : states that this is a universal truth. This is not interpreted by my engine.

Here follow briefly some other features:

log:falseHood

: to indicate that a formula is not true.

log:conjunction :

to indicate the conjunction of two formulas.

log:includes : F includes G means G follows from F.

log:notIncludes: F notIncludes G means G does not follow

from F.

2.7.Semantics

of N3

2.7.1. Introduction

The semantics of N3 are the same as the semantics of

RDF [RDFM].

The semantics indicate the correspondence between the

syntactic forms of RDF and the entities in the universum of discourse. The

semantics are expressed by a model theory. A valid triple is a triple whose elements are defined by the model

theory. A valid RDF graph is a set of

valid RDF triples.

2.7.2. The model theory of RDF

The vocabulary V

of the model is composed of a set of URI’s.

LV is

the set of literal values and XL is the mapping from the literals to LV.

A simple interpretation

I of a vocabulary V is defined by:

1. A non-empty set IR of

resources, called the domain or universe of I.

2. A mapping IEXT from IR into the powerset of IR x (IR union LV) i.e. the set of sets

of pairs <x,y> with x in IR

and y in IR or LV. This mapping

defines the properties of the RDF triples.

3. A mapping IS from V into IR

IEXT(x) is

a set of pairs which identify the arguments for which the property is true,

i.e. a binary relational extension, called the extension of x. [RDFMS]

Informally this means that every URI represents a resource which might

be a page on the internet but not necessarily: it might as well be a physical

object. A property is a relation; this relation is defined by an extension

mapping from the property into a set. This set contains pairs where the first

element of a pair represents the subject of a triple and the second element of

a pair represent the object of a triple. With this system of extension mapping

the property can be part of its own extension without causing paradoxes. This

is explained in [RDFMS].

2.7.3. Examples

Take the triple:

:bird :color :yellow.

In the set of URI’s there will be things like: :bird, :mammal, :color,

:weight, :yellow, :blue etc...These are part of the vocabulary V.

In the set IR of resources will be: #bird, #color etc.. i.e. resources

on the internet or elsewhere. #bird might represent e.g. the set of all birds.

These are the things we speak about.

There then is a mapping IEXT

from #color (resources are abbreviated) to the set

{(#bird,#blue),(#bird,#yellow),(#sun,#yellow),...}

and the mapping IS from V to

IR:

:bird à #bird, :color à #color, ...

The URI refers to a page on the internet where the domain IR is defined (and thus the semantic

interpretation of the URI).

2.8. A global view of the Semantic Web

2.8.1. Introduction.

The Semantic Web

will be composed of a series of

standards. These standards have to be organised into a certain structure that

is an expression of their interrelationships. A suitable structure is a

hierarchical, layered structure.

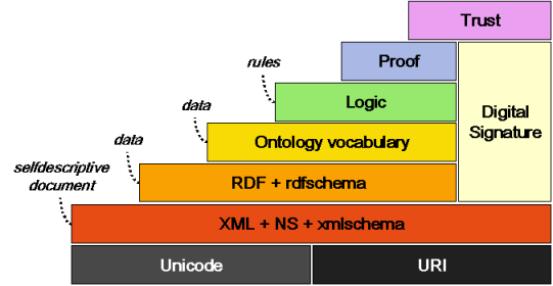

2.8.2. The layers of the Semantic Web

Fig.2.2. illustrates

the different parts of the Semantic Web in the vision of Tim Berners-Lee. The

notions are explained in an elementary manner here. Later some of them will be

treated more in depth.

Layer 1. Unicode and

URI

At the bottom there is Unicode and URI.

Unicode is the Universal code.

Fig.2.2. The layers

of the Semantic Web [Berners-Lee]

Unicode codes the characters of all the major languages in use today.[UNICODE].

Layer 2. XML,

namespaces and XML Schema

See 2.2. for a

description of XML.

Layer 3. RDF en

RDF Schema

The first two layers

consist of basic internet technologies. With layer 3 the Semantic Web begins.

RDF Schema (rdfs)

has as a purpose the introduction of some basic ontological notions. An example

is the definition of the notion “Class” and “subClassOf”.

Layer 4. The

ontology layer

The definitions of

rdfs are not sufficient. A more extensive ontological vocabulary is needed.

This is the task of the Web Ontology workgroup of the W3C who has defined

already OWL (Ontology Web Language) and OWL Lite (a subset of OWL).

Layer 5. The logic

layer

In the case study

the use of rules was mentioned. For expressing rules a logic layer is needed.

An experimental logic layer exists [SWAP/CWM]. This layer is treated in depth

in the following chapters.

Layer 6. The proof

layer

In the vision of Tim

Berners-Lee the production of proofs is not part of the Semantic Web. The

reason is that the production of proofs is still a very active area of research

and it is by no means possible to make a standardisation of this. A Semantic Web engine should only need to

verify proofs. Someone sends to site A a proof that he is authorised to use the

site. Then site A must be able to verify that proof. This is done by a suitable

inference engine. Three inference engines that use the rules that can be defined

with this layer are: CWM [SWAP/CWM] , Euler [DEROO] and RDFEngine developed as

part of this thesis.

Layer 7. The trust

layer

Without trust the

Semantic Web is unthinkable. If company B sends information to company A but

there is no way that A can be sure that this information really comes from B or

that B can be trusted then there remains nothing else to do but throw away that

information. The same is valid for exchange between citizens. The trust has to

be provided by a web of trust that is based on cryptographic principles. The

cryptography is necessary so that everybody can be sure that his communication

partners are who they claim to be and what they send really originates from

them. This explains the column “Digital Signature” in fig. 2.2.

The trust policy is

laid down in a “facts and rules” database. This database is used by an

inference engine like the inference engine I developed. A user defines his

policy using a GUI that produces a policy database. A policy rule might be e.g.

if the virus checker says ‘OK’ and

the format is .exe and it is signed by ‘TrustWorthy’ then accept this input.

The impression might

be created by fig. 2.2 that this whole layered building has as purpose to

implement trust on the internet. Indeed it is necessary for implementing trust

but, once the pyramid of fig. 2.2 comes into existence, on top of it all kind

of applications can be built.

Layer 8. The

applications

This layer is not in

the figure; it is the application layer that makes use of the technologies of

the underlying 7 layers. An example might be two companies A and B exchanging

information where A is placing an order with B.

Chapter 3. RDF, RDFEngine and inferencing.

3.1. Introduction

Chapter 3 will explain the meaning of

inferencing in connection with RDF. Two aspects will be interwoven: the theory

of inferencing on RDF data and the specification of an inference engine,

RDFEngine, in Haskell.

First a specification of a RDF graph will be

given. Then inferencing on a RDF graph will be explained. A definition will be

given for rules, queries, solutions and proofs. After discussing substitution

and unification, an inference step will be defined and an explanation will be

given of the data structure that is needed to perform a step. The notion of

distributed inferencing will be explained and the proof format that is

necessary for distributed inferencing.

The explanations will be illustrated with

examples and the relevant Haskell code.

RDFEngine possesses the characteristics of

monotonicity and completeness. This is demonstrated by a series of lemmas.

Note: The Haskell source code presented in the

chapter is somewhat simplified compared to the source code in appendix.

3.2.Recapitulation and definitions

I give a brief recapitulation of the most

important points about RDF [RDFM,RDF Primer, RDFSC].

RDF is a system for expressing meta-information

i.e. information that says something about other information e.g. a web page.

RDF works with triples. A triple is

composed of a subject, a property and an object. A triplelist is a

list of triples. Subject, property and object can have as value a URI, a

variable or a triplelist. An object can also be a literal. The property is also

often called predicate.

Triples are noted as: (subject, property, object). A triplelist consists of triples

between ‘[‘ and ‘]’, separated by a ‘,’.

In the graph representation subjects and

objects are nodes in the graph

and the arcs represent properties. No nodes can occur twice in the graph.

The consequence is that some triples will be connected with other triples;

other triples will have no connections. Also the graph will have different

connected subgraphs.

A node can also be an blank node which is a kind of anonymous node. A blank node can be instantiated with a URI or a literal and then becomes a ‘normal’

node.

A valid

RDF graph is a graph where all arcs are valid URI’s and all nodes are either valid URI’s, valid

literals, blank nodes or triplelists .

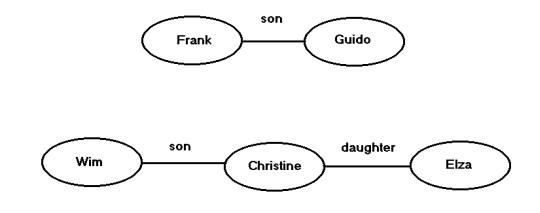

Fig.3.1 gives examples of triples.

Fig.3.1.

A RDF graph consisting of two connected subgraphs.

There are three triples in the graph of fig.

3.1: (Frank, son, Guido), (Wim, son, Christine), (Christine, daughter, Elza).

I give

here the Haskell representation of triples:

data Triple = Triple (Subject,

Predicate, Object) | TripleNil

type Subject = Resource

type Predicate = Resource

type Object = Resource

data Resource = URI String |

Literal String | Var Vare | TripleList | ResNil

type TripleList1 = [Triple]

Four kinds of variables are

defined:

UVar: Universal variable with local scope

EVar: Existential variable with local scope

GVar: Universal variable with global scope

GEVar: Existential variable

with global scope

-- the definition of a variable

data Vare = UVar String | EVar

String | GVar String | GEVar String

These four kinds of variables

are necessary as they occur in the test cases of [DEROO] that were used for

testing RDFEngine.

Suppose fig.3.1 represents a RDF graph. Now

lets make two queries. Intuitively a query is an interrogation of the RDG graph

to see if certain facts are true or not.

The first query is: [(Frank,son,Guido)].

The second query is: [(?who, daughter, Elza)].

This

needs some definitions:

A query is a triplelist. The triples composing the

query can contain variables. When the query does not contain variables it is an

RDF graph. When it contains variables, it is not an RDF raph because variables

are not defined in RDF and are thus an extension to RDF. When the inferencing in RDFEngine starts the

query becomes the goallist. The

goallist is the triplelist that has to be proved i.e. matched against the RDF

graph. This process will be described in detail later.

The answer to a query consists of one or more solutions. In a solution the variables

of the query are replaced by URI’s, when the query did have variables. If not,

a solution is a confirmation that the triples of the query are existing triples

(see also 2.7). I will give later a more precise definition of solutions.

A variable

will be indicated with a question mark. For this chapter it is assumed that the

variables are local universal variables.

A grounded

triple is a triple of which subject, property and object are URI’s or literals,

but not variables. A grounded triplelist contains only grounded triples.

A query can be grounded. In that case an affirmation

is sought that the triples in the query exist, but not necessarily in the RDF

graph: they can be deduced using rules.

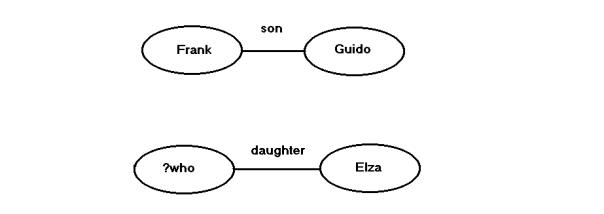

Fig.3.2 gives the representation of some

queries.

In the first query the question is: is Frank the son of Guido? The answer is

of course “yes”. In the second query the question is: who is a daughter of Elza?

Here the

query is a graph containing variables. This graph has to be matched with the

graph in fig.3.1. So generally for executing a RDF query what has to be done is

‘subgraph matching’.

Fig.5.2.

Two RDF queries. The first query does not contain variables.

The variables in a query are replaced in the

matching process by a URI or a literal. This replacement is called a substitution. A substitution is a list

of tuples (variable, URI) or (variable, literal)[1].

The list can be empty. I will come back on substitutions later.

A rule is a triple. Its subject is a triplelist

containing the antecedents and its

object is a triplelist containing the consequents.

The property or predicate of the rule is the URI :

http://www.w3.org/2000/10/swap/log# implies. [SWAP] . I will give following

notation for a rule:

{triple1,

triple2,…} => {triple11,triple21,…}

For

clarity, only rules with one consequent will be considered. The executable

version of RDFEngine can handle multiple consequents.

For a

uniform handling of rules and other triples the concept statement is introduced in the abstract Haskell syntax:

type

Statement = (TripleList, TripleList,String)

type

Fact = Statement -- ([],Triple,String)

type

Rule = Statement

In this terminology a rule is a statement. The

first triplelist of a rule is called the antecedents

and the second triplelist is called the consequents.

A fact is a rule with empty

antecedents. The string part in the statement is the provenance information. It indicates the origin of the statement.

The origin is the namespace of the

owner of the statement.

In a rule the order of the triples is relevant.

3.3. The graph ADT

A RDF

graph is a set of statements. It is represented in Haskell by an Abstract Data Type: RDFGraph.hs.

The implementation of the ADT is done by using

an array and a hashtable.

data

RDFGraph = RDFGraph (Array Int Statement, Hash, Params)

The statements of the graph are in an array.

The elements Hash and Params in the type RDFGraph constitute a

hashtable.

A

predicate is associated with each statement. This is the predicate of the first

triple of the second triplelist of the statement1.

An entry into the hashtable has as a key a predicate name and a list of

numbers. Each number refers to an entry in the array associated with the

RDFGraph.

Params is an array containing some parameters

for the ADT like the last entry into the array of statements.

Example: Given the triple (a,b,c) that is entry number 17 in the array, the

entry in the dictionary with key ‘b’ will give a list of numbers […,17,…]. The

other numbers will be triples or rules with the same predicate.

In this way all statements with the same

associated predicate can be retrieved at once. This is used when matching

triples of the query with the RDF graph.

The ADT is accessed by means of a mini-language defined in the module

RDFML.hs. This mini-language contains functions for manipulating triples,

statements and RDF Graphs. Fig. 3.3. gives an overview of the mini-language.

Through the encapsulation by the mini-language

this implementation of the ADT could be replaced by another one where only one

module would need to be changed. For the user of the module only the

mini-language is important[2].

Mini-language for triples, statements and graphs.

--

triple :: String -> String -> String -> Triple

-- make

a triple from three strings

--

triple s p o : make a triple

nil ::

Triple

--

construct a nil triple

nil =

TripleNil

tnil ::

Triple -> Bool

-- test

if a triple is a nil triple

tnil t

| t == TripleNil = True

| otherwise = False

s ::

Triple -> Resource

-- get

the subject of a triple

s t = s1

where Triple (s1,_,_) = t

p ::

Triple -> Resource

-- get

the predicate from a triple

p t = s1

where Triple (_,s1,_) = t

o ::

Triple -> Resource

-- get

the object from a triple

o t = s1

where Triple (_,_,s1) = t

st ::

TripleSet -> TripleSet -> Statement

--

construct a statement from two triplesets

-- -1

indicates the default graph

st ts1 ts2

= (ts1,ts2,"-1")

gmpred

:: Statement -> String

-- get

the main predicate of a statement

-- This

is the predicate of the first triple of the consequents

gmpred

st

| (cons st) == [] = ""

| otherwise = pred

where t = head(cons st)

pred = grs(p t)

stnil ::

Statement

--

return a nil statement

stnil =

([],[],"0")

tstnil

:: Statement -> Bool

-- test

if the statement is a nil statement

tstnil

st

| st == ([],[],"0") = True

| otherwise = False

trule ::

Statement -> Bool

-- test

if the statement is a rule

trule

([],_,_) = False

trule st

= True

tfact ::

Statement -> Bool

-- test

if the statement st is a fact

tfact

([],_,_) = True

tfact st

= False

stf ::

TripleSet -> TripleSet -> String -> Statement

--

construct a statement where the Int indicates the specific graph.

--

command 'st' takes as default graph 0

stf ts1

ts2 s = (ts1,ts2,s)

protost

:: String -> Statement

--

transforms a predicate in RDFProlog format to a statement

-- example: "test(a,b,c)."

protost

s = st

where [(cl,_)] = clause s

(st, _, _) = transClause (cl, 1,

1, 1)

sttopro

:: Statement -> String

--

transforms a statement to a predicate in RDFProlog format

sttopro

st = toRDFProlog st

sttppro

:: Statement -> String

--

transforms a statement to a predicate in RDFProlog format with

--

provenance indication (after the slash)

sttppro

st = sttopro st ++ "/" ++ prov st

ants ::

Statement -> TripleSet

-- get

the antecedents of a statement

ants

(ts,_,_) = ts

cons :: Statement

-> TripleSet

-- get

the consequents of a statement

cons

(_,ts,_) = ts

prov ::

Statement -> String

-- get

the provenance of a statement

prov

(_,_,s) = s

fact ::

Statement -> TripleSet

-- get

the fact = consequents of a statement

fact

(_,ts,_) = ts

tvar ::

Resource -> Bool

-- test

whether this resource is a variable

tvar

(Var v) = True

tvar r =

False

tlit ::

Resource -> Bool

-- test

whether this resource is a literal

tlit

(Literal l) = True

tlit r =

False

turi ::

Resource -> Bool

-- test

whether this resource is a uri

turi

(URI r) = True

turi r =

False

grs ::

Resource -> String

-- get

the string value of this resource

grs r =

getRes r

gvar ::

Resource -> Vare

-- get

the variable from a resource

gvar (Var v) = v

graph ::

TS -> Int -> String -> RDFGraph

-- make

a numbered graph from a triple store

-- the

string indicates the graph type

-- the

predicates 'gtype' and 'gnumber' can be queried

graph ts

n s = g4

where changenr nr (ts1,ts2,_) =

(ts1,ts2,intToString nr)

g1 = getRDFGraph (map (changenr

n) ts) s

g2 = apred g1

("gtype(" ++ s ++ ").")

g3@(RDFGraph (array,d,p)) =

apred g2 ("gnumber(" ++

intToString n ++ ").")

([],tr,_) = array!1

array1 =

array//[(1,([],tr,intToString n))]

g4 = RDFGraph (array1,d,p)

agraph

:: RDFGraph -> Int -> Array Int RDFGraph -> Array Int RDFGraph

-- add a

RDF graph to an array of graphs at position n.

-- If

the place is occupied by a graph, it will be overwritten.

--

Limited to 5 graphs at the moment.

agraph g n

graphs = graphs//[(n,g)]

-- maxg defines the maximum

number of graphs

maxg :: Int

maxg = 3

statg :: RDFGraph -> TS

-- get all statements from a

graph

statg g = fromRDFGraph g

pgraph :: RDFGraph -> String

-- print a RDF graph in

RDFProlog format

pgraph g = tsToRDFProlog

(fromRDFGraph g)

pgraphn3 :: RDFGraph ->

String

-- print a RDF graph in Notation

3 format

pgraphn3 g = tsToN3

(fromRDFGraph g)

cgraph :: RDFGraph -> String

-- check a RDF graph. The string

contains first the listing of the original triple

-- store in RDF format and then

all statements grouped by predicate. The two listings

-- must be identical

cgraph g = checkGraph g

apred :: RDFGraph -> String

-> RDFGraph

-- add a predicate of arity

(length t). g is the rdfgraph, p is the

-- predicate (with ending dot).

apred g p = astg g st

where st = protost p

astg :: RDFGraph -> Statement

-> RDFGraph

-- add statement st to graph g

astg g st = putStatementInGraph

g st

dstg :: RDFGraph -> Statement

-> RDFGraph

-- delete statement st from the

graph g

dstg g st = delStFromGraph g st

gpred :: RDFGraph -> Resource

-> [Statement]

-- get the list of all

statements from graph g with predicate p

gpred g p = readPropertySet g p

gpvar :: RDFGraph ->

[Statement]

-- get the list of all

statements from graph g with a variable predicate

gpvar g = getVariablePredicates

g

gpredv :: RDFGraph ->

Resource ->[Statement]

-- get the list of all

statements from graph g with predicate p

-- and with a variable predicate.

gpredv g p = gpred g p ++ gpvar

g

checkst :: RDFGraph ->

Statement -> Bool

-- check if the statement st is

in the graph

checkst g st

| f == [] = False

| otherwise= True

where list = gpred g (p (head (cons st)))

f = [st1|st1 <- list, st1 == st]

changest :: RDFGraph ->

Statement -> Statement -> RDFGraph

-- change the value of a

statement to another statement

changest g st1 st2 = g2

where list = gpred g (p (head (cons st1)))

(f:fs) = [st|st <- list, st == st1]

g1 = dstg g f

g2 = astg g st2

setparam :: RDFGraph ->

String -> RDFGraph

-- set a parameter. The

parameter is in RDFProlog format (ending dot

-- included.) . The predicate

has only one term.

setparam g param

| fl == [] = astg g st

| otherwise = changest g f st

where st = protost param

fl = checkparam g param

(f:fs) = fl

getparam :: RDFGraph ->

String -> String

-- get a parameter. The

parameter is in RDFProlog format.

-- returns the value of the

parameter.

getparam g param

| fl == [] = ""

| otherwise = grs (o (head (cons f)))

where st = protost param

fl = checkparam g param

(f:fs) = fl

checkparam :: RDFGraph ->

String -> [Statement]

-- check if a parameter is

already defined in the graph

checkparam g param

| ll == [] = []

| otherwise = [l1]

where st = protost param

list = gpred g (p (head (cons st)))

ll = [l|l <-list, length (cons l) == 1]

(l1:ll1) = ll

assert :: RDFGraph ->

Statement -> RDFGraph

-- assert a statement

assert g st = astg g st

retract :: RDFGraph ->

Statement -> RDFGraph

-- retract a statement

retract g st = dstg g st

asspred :: RDFGraph -> String

-> RDFGraph

-- assert a predicate

asspred g p = assert g (protost

p)

retpred :: RDFGraph -> String

-> RDFGraph

--

retract a statement

retpred

g p = retract g (protost p)

Fig. 3.3. The mini-language for manipulating

triples, statements and RDF graphs.

3.4.Languages

needed for inferencing

Four languages are needed for inferencing

[HAWKE] . These languages are needed for handling:

1) facts

2) rules

3) queries

4) results

These are four data structures and using them

in inference implies using different operational procedures for each of the

languages. This is also the case in RDFEngine.

These languages can (but must not) have the

same syntax but they cannot have the same semantics. The language for facts is

based on the RDF data model. Though rules have the same representation (they

can be represented by triples and thus RDF syntax, with variables as an

extension ), their semantic interpretation is completely different from the

interpretation of facts.

The query language presented in this thesis is

a rather simple query language, for a standard engine on the World Wide Web

something more complex will be needed. Inspiration can be sought in a language

like SQL.

For representing results in this thesis a very

simple format is proposed in 3.10.

3.5.The model interpretation of a rule

A valid RDF graph is a graph where all nodes are either

blank nodes or labeled with a URI or constituted by a triplelist. All arcs are

labeled with valid URIs.

A number

of results from the RDF model theory [RDFM] are useful .

Subgraph Lemma. A graph entails1

all its subgraphs.

Instance Lemma. A graph is entailed by any of its instances.

An

instance of a graph is another graph where one or more blank nodes of the

previous graph are replaced by a URI or a literal.

Merging Lemma. The

merge2 of a set S of RDF graphs is entailed by

S and entails every member of S.

Monotonicity Lemma.

Suppose S is a subgraph of S’ and S entails E. Then S’ entails E.

These

lemmas establish the basic ways of reasoning on a graph. One basic way of

reasoning will be added: the implication. The implication, represented in

RDFEngine by a rule, is defined as follows:

Given the

presence of subgraph SG1 in graph G and the implication:

SG1 à SG2

the

subgraph SG2 is merged with G giving G’ and if G is a valid graph then G’ is a

valid graph too.

Informally,

this is the merge of a graph SG2 with the graph G giving G’ if SG1 is a

subgraph of G.

The

closure procedure used for axiomatizing RDFS in the RDF model theory [RDFM]

inspired me to give an interpetation of rules based on a closure process.

Take the following rule R describing the

transitivity of the ‘subClassOf’ relation:

{( ?c1,

subClassOf, ?c2),( ?c2, subClassOf, ?c3)} implies {(?c1,

subClassOf , ?c3)}.

The rule

is applied to all sets of triples in the graph G of the following form:

{(c1,

subClassOf, c2), (c2, subClassOf, c3)}

yielding a triple (c1, subClassOf, c3).

This last

triple is then added to the graph. This process continues till no more triples

can be added. A graph G’ is obtained called the closure of G with respect to

the rule set R1. In the ‘subClassOf’

example this leads to the transitive closure of the subclass-relation.

If a

query is posed: (cx, subClassOf, cy) then the answer is positive if this triple is

part of the closure G’; the answer is negative if it is not part of the

closure.

When variables are used in the query:

(?c1,

subClassOf, ?c2)

then the

solution consists of all triples (subgraphs) in the closure G’ with the

predicate ‘subClassOf’.

The

process of matching the antecedents of the rule R with a graph G and then

adding the consequent to the graph is called applying the rule R to the graph G.

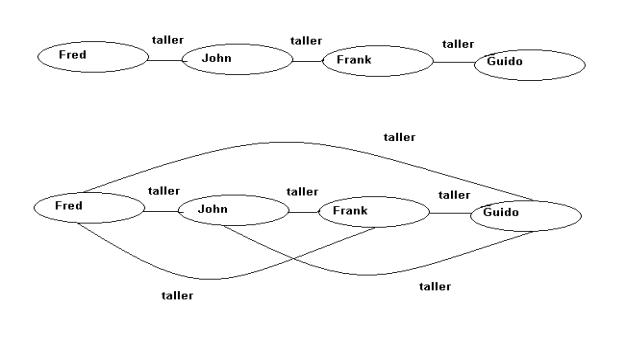

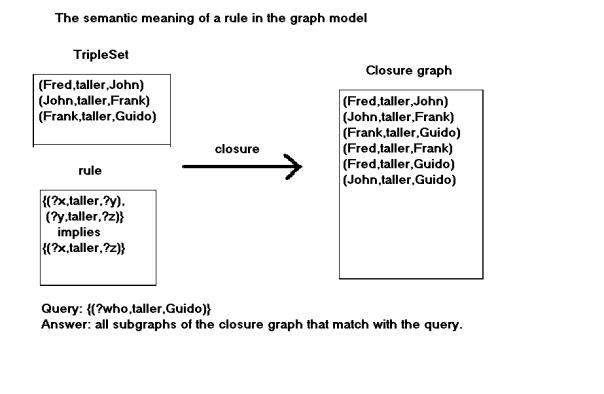

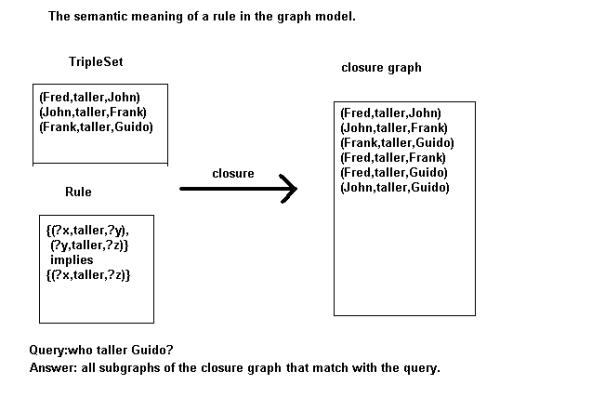

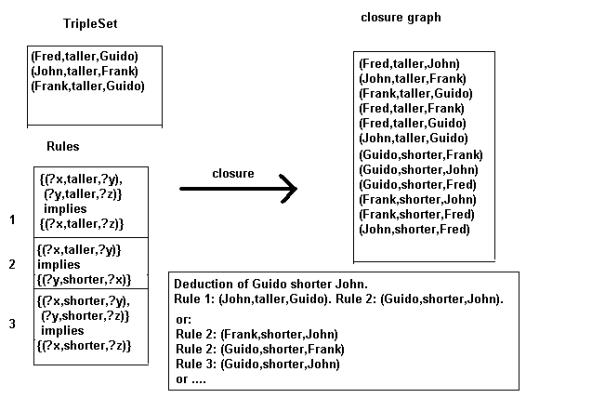

The process is illustrated in fig. 3.4 and 3.5

with a similar example.

When more than one rule is used the closure G’ will be obtained by the repeated

application of the set of rules till no more triples are produced.

Any solution obtained by a resolution process

is then a valid solution if it is a subgraph of the closure obtained.

I will not enter into a detailed comparison

with first order resolution theory but I want to mention following point:

the

closure graph is the equivalent of a minimal Herbrand model in first order

resolution theory or a least fixpoint in fixpoint semantics.[VAN BENTHEM]

Fig.3.4.

A graph with some taller relations

and a graph representing the transitive

closure of the first graph with respect to the relation taller.

Fig. 3.5.

The closure G’ of a graph G with respect to the given rule set.

The possible answers to the query are: (Frank,taller,Guido),

(Fred,taller,Guido), (John,taller,Guido).

3.6.Unifying

two triplesets

3.6.1. Example

Fig.3.6

gives a schematic view of multiple matches of a triple with a triplelist. The

triple (Bart, son, ?w) unifies with

two triples (Bart, son, Jan) and (Bart, son, Michèle). The triple (?w, son, Paul) matches with (Guido, son, Paul) and (Jan, son, Paul). This gives 4 possible

combinations of matching triples. Following the theory of resolutions all those

combinations must be contradicted. In graph terms: they have to match with the

closure graph. However, the common variable ?w

between the first and the second triple of triple set 1 is a restriction. The

object of the first triple must be the same as the subject of the second triple

with as a consequence that there is one solution: ?w = Jan. Suppose the second

triple was: (?w1, son, Paul). Then

this restriction does not any more apply and there are 4 solutions:

{(Bart, son, Jan),(Guido, son, Paul)}

{(Bart, son, Jan), (Jan, son, Paul)}

{(Bart, son, Michèle), (Guido, son, Paul)}

{(Bart, son, Michèle), (Jan, son, Paul)}

This example shows that when unifying two triplelists the product of all the alternatives has to be taken while the substitution has to be propagated from one triple to the next for the variables that are equal and also within a triple if the same variable or node occurs twice in the same triple.

3.6.2. Definition

Let T1 and T2 be triplelists containing only

facts and T1 can contain also global, existential variables1.

Let Q be the graph represented by triplelist T1 and G the graph represented by

triplelist T2.

The unification of T1 and T2 is the set S of

subgraphs of G where each element Si of S is isomorph with a graph Q’ and each

Q’ is equal to Q with the variables of Q replaced by labels from Si.

It will

be shown that this unification can be implemented by a resolution process with

backtracking.

Fig.3.6.

Multiple matches within one triplelist.

Fig.3.6.

Multiple matches within one triplelist.

3.7.Unification

A triple

consists of a subject, a property and an object. Unifying two triples means

unifying the two subjects, the two properties and the two objects. A variable

unifies with a URI or a literal1. Two

URI’s unify if they are the same. The result of a unification is a

substitution. A substitution is either a list of tuples (variable, URI or

Literal). The list can be empty. The result of the unification of two triples

is a substitution (containing at most 3 tuples). Applying a substitution to a

variable in a triple means replacing the variable with the URI or literal

defined in the corresponding tuple.

type ElemSubst = (Var, Resource)

type Subst = [ElemSubst]

A nil

substitution is an empty list.

nilsub :: Subst

nilsub = []

The

unification of two statements is given in fig. 3.7.

The application

of a substitution is given in fig. 3.8.

-- appESubstR applies an

elementary substitution to a resource

appESubstR :: ElemSubst ->

Resource -> Resource

appESubstR (v1, r) v2

| (tvar v2) && (v1 == gvar v2) = r

| otherwise = v2

-- applyESubstT applies an

elementary substitution to a triple

appESubstT :: ElemSubst ->

Triple -> Triple

appESubstT es TripleNil =

TripleNil

appESubstT es t =

Triple (appESubstR es (s t), appESubstR es (p t), appESubstR es

(o t))

-- appESubstSt applies an

elementary substitution to a statement

appESubstSt :: ElemSubst ->

Statement -> Statement

appESubstSt es st = (map

(appESubstT es) (ants st),

map (appESubstT es) (cons st),

prov st)

-- appSubst applies a

substitution to a statement

appSubst :: Subst ->

Statement -> Statement

appSubst [] st = st

appSubst (x:xs) st = appSubst

xs (appESubstSt x st)

-- appSubstTS applies a substitution to a triple store

appSubstTS :: Subst -> TS

-> TS

appSubstTS subst ts = map

(appSubst subst) ts

Fig.3.7. The application of a

substitution. The Haskell source code uses the mini-language defined in

RDFML.hs

In the application of a

substitution the variables in the datastructures are replaced by resources

whenever appropriate.

3.8.Matching

two statements

The Haskell source

code in fig. 3.8. gives the unification

functions.

-- type Match = (Subst, Goals, BackStep)

-- type BackStep = (Statement, Statement)

-- unify two statements

unify :: Statement -> Statement -> Maybe

Match

-- unify two statements

unify :: Statement -> Statement -> Maybe

Match

unify st1@(_,_,s1) st2@(_,_,s2)

|

subst == [] = Nothing

|

(trule st1) && (trule st2) = Nothing

|

trule st1 = Just (subst, transTsSts (ants st1) s1, (st2,st1))

|

trule st2 = Just (subst, transTsSts

(ants st2) s2, (st2,st1))

|

otherwise = Just (subst, [stnil], (st2,st1))

where subst = unifyTsTs (cons st1) (cons st2)

-- unify two triplelists

unifyTsTs :: TripleList -> TripleList ->

Subst

unifyTsTs ts1 ts2 = concat res1

where res = elimNothing

[unifyTriples t1 t2|t1 <- ts1, t2 <-

ts2]

res1 = [sub |Just sub <- res]

unifyTriples

:: Triple -> Triple -> Maybe Subst

unifyTriples

TripleNil TripleNil = Nothing

unifyTriples t1 t2 =

if (subst1 == Nothing) || (subst2 == Nothing)

|| (subst3 ==

Nothing) then Nothing

else Just (sub1 ++ sub2 ++ sub3)

where subst1 = unifyResource (s t1) (s

t2)

subst2 = unifyResource (p t1) (p t2)

subst3 = unifyResource (o t1) (o t2)

Just sub1 = subst1

Just sub2 = subst2

Just sub3 = subst3

-- transform a tripleset to a list of statements

transTsSts :: [Triple] -> String ->

[Statement]

transTsSts ts s = [([],[t],s)|t <- ts]

unifyResource :: Resource -> Resource ->

Maybe Subst

unifyResource r1 r2

|(tvar

r1) && (tvar r2) && r1 == r2 =

Just [(gvar r1, r2)]

| tvar r1 = Just [(gvar r1, r2)]

| tvar r2 = Just [(gvar r2, r1)]

|

r1 == r1 = Just []

|

otherwise = Nothing

Fig. 3.8. The

unification of two statements

When matching a

statement with a rule, the consequents of the rule are matched with the

statement. This gives a substitution. This substitution is returned together

with the antecedents of the rule and a tuple formed by the statement and the

rule. The tuple is used for a history of the unifications and will serve to

produce a proof when a solution has been found.

The antecedents of

the rule will form new goals to be proven in the inference process.

An example:

Facts:

(Wim, brother, Frank)

(Christine, mother, Wim)

Rule:

[(?x, brother, ?y), (?z, mother, ?x)] implies

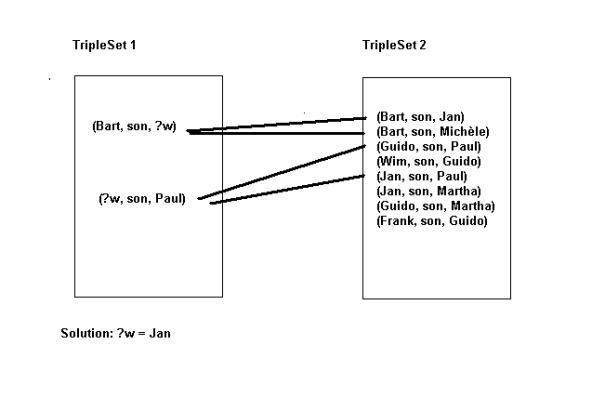

[(?z, mother, ?y)]